Updated · Feb 11, 2024

Updated · Oct 25, 2023

Raj Vardhman is a tech expert and the Chief Tech Strategist at TechJury.net, where he leads the rese...

Lorie is an English Language and Literature graduate passionate about writing, research, and learnin...

The rise of AI and Large Language Models (LLMs) has drastically reshaped the entire web space. OpenAI’s ChatGPT and Google’s Bard have risen massively in popularity, stretching beyond purely technical applications.

However, training AI models can be a real chore. AI models require a lot of data to function properly. With 1.145 trillion MB of data produced daily, gathering valuable information is also challenging. Public datasets on the Internet have a lot of unusable data, and manually going through a bunch of sources is like finding a needle in a haystack.

This is where data extraction comes in: filtering and collecting essential information from a vast pool of sources. Dive deep into everything related to data extraction and how AI is changing it.

📖 Definition: Data extraction is the process of “extracting” usable information from websites and other publicly available sources. Data collected from this process is often stored or analyzed for research.

If you want to optimize your business with new strategies and offer better services or products at competitive prices, data extraction makes all that possible by allowing you to gain insights from hundreds of sources at once.

However, manually picking out functional data from stacks of documents, articles, social media posts, and other sources is not feasible. It can be time-consuming, filled with errors, and limited to specific sources.

That said, here are a few tools and processes that can help make data extraction easy:

Web scraping is one of the two common approaches to extracting data. This means gathering data from websites to generate easy-to-use datasets.

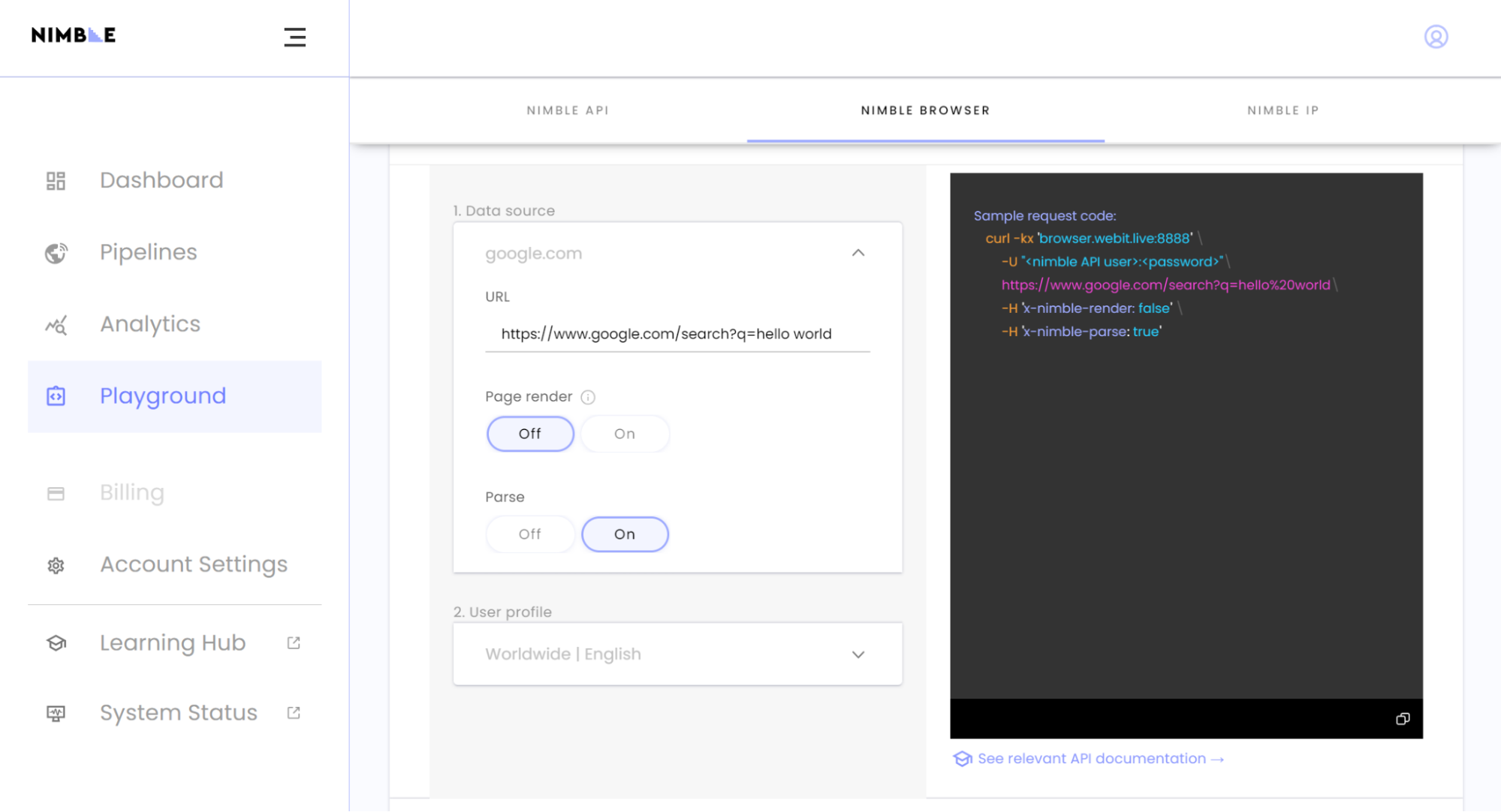

While the data quality and structure can be complex, scraping tools like the Nimble Browser and Python libraries make gathering usable information from dynamic webpages easy.
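As a rough illustration of what a scraping script does once it has a page's HTML, the sketch below pulls link targets and link text out of a small snippet using only Python's standard library. The `SAMPLE_HTML` markup and the `extract_links` helper are made up for this example; a real page would be downloaded first and would usually need more careful handling.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects (href, text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag currently being read
        self._text = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Hypothetical markup standing in for a downloaded product listing page.
SAMPLE_HTML = """
<ul>
  <li><a href="/products/1">Blue Mug</a></li>
  <li><a href="/products/2">Red <b>Mug</b></a></li>
</ul>
"""


def extract_links(html):
    """Return a list of (href, link text) tuples found in the HTML."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


links = extract_links(SAMPLE_HTML)
```

Dedicated scraping tools add rendering of dynamic pages, retries, and scheduling on top of this basic idea.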

Proxy servers (or proxies) are anonymity tools that work between a user’s device and the Internet. A proxy lets you take a different IP address while browsing for a safer online experience.

Besides circumventing geo-restrictions for streaming or shopping, proxies can also be used to extract data. Services like Apify and Nimble IP mask your actual IP address and help you get past IP-based restrictions, such as blocks or CAPTCHAs triggered by repeated requests, and other anti-scraping measures.
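To show how a script can route its requests through a proxy, here is a minimal sketch using Python's standard `urllib`. The proxy address is a placeholder, not a real service, and the `build_proxied_opener` helper is a name chosen for this example; commercial proxy providers typically supply their own host, port, and credentials.

```python
import urllib.request

# Placeholder address: replace with the proxy endpoint from your provider.
PROXY_URL = "http://127.0.0.1:8080"


def build_proxied_opener(proxy_url):
    """Create an opener that sends HTTP and HTTPS traffic through the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)

# With a working proxy, a request would then look like this:
# with opener.open("https://example.com", timeout=10) as response:
#     html = response.read().decode("utf-8", errors="replace")
```

The target website sees the proxy's IP address instead of yours, which is what makes IP-based limits harder to apply to a single scraper.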

APIs offer a ready-made interface for requesting data, which reduces the need to build and maintain complex custom code. Tools like the Nimble API can integrate with your cloud storage service and provide auto-generated code to make the process as beginner-friendly as possible.
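The general shape of working with an extraction API is to send a request that names the target page and then read back structured JSON. The sketch below illustrates that pattern only: the endpoint, the `url` and `format` parameters, the bearer token header, and the `results` field are all assumptions invented for this example and do not describe the Nimble API or any other real product.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint used purely for illustration.
API_BASE = "https://api.example.com/v1/extract"


def build_request(target_url, api_key):
    """Prepare a GET request asking the (hypothetical) API to extract a page."""
    query = urllib.parse.urlencode({"url": target_url, "format": "json"})
    request = urllib.request.Request(f"{API_BASE}?{query}")
    request.add_header("Authorization", f"Bearer {api_key}")
    return request


def parse_response(body):
    """Decode a JSON response body and return its list of extracted records."""
    payload = json.loads(body)
    return payload.get("results", [])


request = build_request("https://example.com/products", "YOUR_API_KEY")

# Example response body, shaped the way this sketch assumes the API replies.
sample_body = b'{"results": [{"title": "Blue Mug", "price": "12.50"}]}'
records = parse_response(sample_body)
```

Always check the provider's own documentation for the actual endpoint, authentication scheme, and response format.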

✅ Pro Tip: Always handle data extraction carefully. Follow all the rules and regulations of the websites to avoid any legal trouble.
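One practical way to respect a website's rules is to check its `robots.txt` file before fetching a page. The sketch below uses Python's standard `urllib.robotparser` on an inline example file; the rules, the user agent name, and the URLs are invented for illustration. Note that `robots.txt` is only one signal, and a site's terms of service may set further limits.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content; a real script would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


can_scrape_products = is_allowed(ROBOTS_TXT, "my-scraper", "https://example.com/products")
can_scrape_private = is_allowed(ROBOTS_TXT, "my-scraper", "https://example.com/private/data")
```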

Most of the time, the extracted data from the tools and processes mentioned above are raw and unstructured. This means you must integrate parsing into the process to acquire usable data. Data parsing means converting the raw data into a readable format to easily create business insights and decisions.

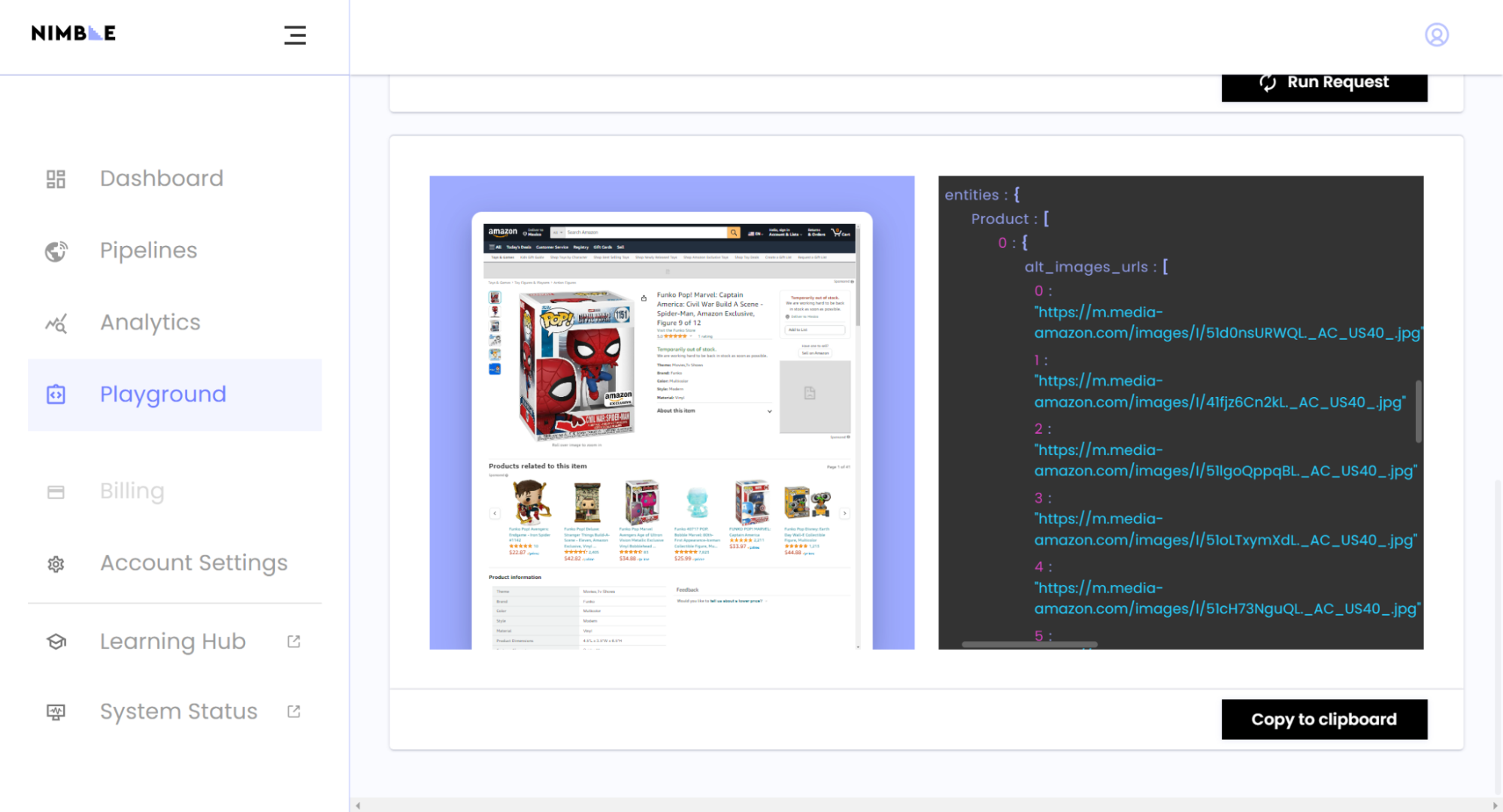

Data gathered from web scraping are commonly used for Machine Learning (ML), AI, e-commerce platforms, and more. That said, most of the data is in unstructured HTML format and needs to be converted to be usable.
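To make the parsing step concrete, the following sketch turns a small HTML table into a list of dictionaries with typed values. It relies only on the standard library, and the table contents, the `TableParser` class, and the `parse_table` helper are assumptions made for this example rather than part of any specific tool.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of every table cell, grouped by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Hypothetical raw HTML as it might come back from a scraper.
RAW_HTML = """
<table>
  <tr><th>product</th><th>price</th></tr>
  <tr><td>Blue Mug</td><td>$12.50</td></tr>
  <tr><td>Red Mug</td><td>$9.99</td></tr>
</table>
"""


def parse_table(html):
    """Convert the first row into field names and the rest into records."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    header, *body = parser.rows  # assumes the table has a header row
    records = [dict(zip(header, row)) for row in body]
    for record in records:
        # Turn the price string into a number so it can be analyzed.
        record["price"] = float(record["price"].lstrip("$"))
    return records


records = parse_table(RAW_HTML)
```

The result is a structured dataset that can be exported to JSON or CSV, or loaded into an analysis tool.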

Not to mention, web scraping is labor-intensive due to the scripting process and required quality checks. It also lacks any standardization, and every platform has its unique extraction method.

Dedicated data extraction tools are usually the most effective way to scrape data at scale. These tools work around anti-bot measures and use AI-driven features to speed up the extraction process.

One effective tool is the Nimble Browser. It lets you scrape usable information, streamline the process, and manage the data sources all from a single dashboard. You can also access Nimble API and IP to extract information without restrictions.

Tools like the Nimble Browser use automation to industrialize the data extraction process. This improves the extraction rate, saving time and manpower.

Automation also allows the tool to break down complex datasets to provide real-time updates, making them highly applicable to large-scale data extraction operations.

AI plays a crucial part in the automation process and offers the following benefits:

💡 Did you know? The AI market size reached $136.6 billion in 2022. With the rise of AI in data analytics and other fields, experts say the market for the technology might reach $1,811.8 billion by 2030.

Data extraction tools are applicable across many different sectors and not just research or tech. You can use data to empower your business strategies, develop better products or services, and even set competitive prices to ensure you’re ahead of your competitors.

Here are a few examples of industries that data extraction is necessary for:

Web scraping tools like the Nimble Browser can easily collect information from e-commerce platforms like Amazon or Walmart. This helps businesses improve their pricing strategy and product information to offer a better deal to their customers reliably.

Scraping also works for retail businesses as it lets them price their products and services competitively to grow and reliably expand their reach.
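As a simple illustration of how scraped prices can inform a pricing decision, the sketch below compares one store's price against competitor prices. The numbers are invented, and `price_summary` is a hypothetical helper; real pricing strategies would weigh many more factors such as costs, stock, and demand.

```python
from statistics import median


def price_summary(competitor_prices, our_price):
    """Summarize where our price sits relative to competitor prices."""
    lowest = min(competitor_prices)
    mid = median(competitor_prices)
    return {
        "lowest": lowest,
        "median": mid,
        "above_median": our_price > mid,
    }


# Made-up prices for the same product, as collected from three other stores.
summary = price_summary([9.99, 12.50, 11.00], our_price=12.00)
```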

Social media and travel platforms offer an extensive range of data, from reviews and sponsored posts to pricing and more. This helps you set competitive pricing options, develop new marketing strategies, and improve your customer experience.

It can even improve your offerings by optimizing and creating new services requested by the masses. Web scraping tools like the Nimble Browser can be of great use, allowing you to access a massive dataset reliably.

Many analysts and financial apps use AI-driven web scraping tools to predict market shifts, follow trends, and make investment decisions by extracting reports, stock market information, and economic indicators.

Web scraping tools automate all the data extraction processes and offer insights into how the market changes for better investment opportunities.

Social media polls, public documents, and general reviews from websites like Reddit, Instagram, and Google are all important research inputs for developing key strategies and optimizing your SEO presence.

This also gives academic institutions and researchers access to hundreds of information sources and datasets to contribute to advancing society and scientific knowledge.

👍 Helpful Article: Google processes billions of search queries daily, which makes it one of the best data sources. This means scraping it gives you a higher chance of obtaining valuable data. Check out this TechJury guide on scraping Google Search to learn how to extract information from the world’s biggest search engine.

Property trends and statistics also help real estate agencies shape the property market. Scraping data like the current land prices, optimal structure area, and essential ownership rules and regulations can help companies market their listings effectively to buyers and dealers.

Web scraping tools like Nimble can effectively collect information from various sources and ensure agencies can track the evolving landscape to optimize their property listings better.

Using data extraction tools and APIs is easy. Just follow the steps below and get started with extracting data for your business:

Step 1: Set the requirements and goals for the data you want to extract.

Step 2: Choose the tools that you want to use. Nimble is a beginner-friendly option with a rich feature set and easy automation.

Step 3: Identify the data source. Ensure you follow the terms and conditions of the website you’re extracting the data from.

Step 4: Start the data extraction process on your tool.

Step 5: Copy the HTML code and incorporate it with your project.

Step 6: Structure and refine your datasets using tools like Python or Excel.

Step 7: Gain insights from the data and use it to optimize your business with the latest changes and trends.

Following the steps above, you can use data extraction techniques to boost your business growth.
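The structuring and refining in Step 6 can be sketched in a few lines of Python. The example below assumes the extracted records are dictionaries with `product` and `price` fields, which is an assumption for illustration only; it drops incomplete rows, removes duplicates, and writes the result as CSV text that Excel can open.

```python
import csv
import io


def refine(records):
    """Drop incomplete rows, remove duplicate products, and normalize values."""
    seen = set()
    cleaned = []
    for record in records:
        name = (record.get("product") or "").strip()
        price = record.get("price")
        if not name or price is None:
            continue  # skip rows with missing data
        key = name.lower()
        if key in seen:
            continue  # skip duplicates, ignoring case
        seen.add(key)
        cleaned.append({"product": name, "price": round(float(price), 2)})
    return cleaned


def to_csv(records):
    """Serialize the cleaned records as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["product", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Made-up raw records with the kinds of problems scraped data often has.
raw_records = [
    {"product": " Blue Mug ", "price": "12.50"},
    {"product": "blue mug", "price": 12.5},
    {"product": "", "price": 3},
    {"product": "Red Mug", "price": None},
]

cleaned = refine(raw_records)
csv_text = to_csv(cleaned)
```

Saving `csv_text` to a file gives you a dataset ready for the analysis in Step 7.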

Data extraction has always been complicated and challenging, but it is changing with AI. Automated web scraping tools, proxies, and APIs are changing the game and making data extraction beginner-friendly.

If you’ve been looking for AI-driven data extraction tools to optimize your business strategies, look no further than Nimble. It’s a one-stop solution for scraping data from websites effectively and ensuring a seamless extraction process.

It also uses automated scripting and proxy technologies that make the data extraction process accurate, efficient, and suited to your needs. Not to mention, AI is making great strides by offering pattern recognition technologies and improving cost-effectiveness, making Nimble a great choice to start scraping with. So, start your web scraping journey and start optimizing your business today.

Yes, anyone can use AI data extraction tools. These services are made for organizations, researchers, and people looking for effective methods to collect and analyze vast amounts of data.

Proxies serve as masks for your online persona. They let you get around IP-based limitations and maintain anonymity when web scraping.

Yes, trusted AI tools ensure data security. To defend against unauthorized access and data breaches, most AI technologies prioritize data security by using encryption and complex proxy networks.
